Core42 launches gpt-oss access with choice of compute platforms

Core42 offers gpt-oss models delivering up to 3,000 tokens per second

#UAE #cloudAI - Core42, a UAE-based leader in sovereign cloud and AI infrastructure, has launched global access to OpenAI's open-weight models gpt-oss-20B and gpt-oss-120B through its sovereign AI Cloud platform, delivering inference speeds of up to 3,000 tokens per second (TPS) per user. The deployment lets enterprises, researchers and developers access OpenAI's latest models through the Core42 Compass API, with a choice of AMD, Cerebras, Nvidia or Microsoft Azure platforms. All UAE-hosted deployments run under UAE jurisdiction, allowing local regulated enterprises to run real-time AI applications whilst maintaining compliance in sectors such as healthcare, finance and national security.

SO WHAT? - With the launch of gpt-oss models on Core42 AI Cloud, organisations in the UAE gain fast, scalable access to OpenAI’s latest open-weight models via a controlled sovereign deployment. Meanwhile, with speeds of up to 3,000 tokens per second (TPS) per user, Core42 is positioning its access to the new open-weight models as a globally competitive service.
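To put the headline figure in context, the short sketch below converts a per-user throughput into approximate generation time. It is a back-of-the-envelope illustration only: the response lengths are hypothetical examples, the 3,000 TPS figure is the quoted "up to" peak rather than a guaranteed rate, and the calculation ignores network latency and time to first token.

```python
def generation_time_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Approximate time to stream num_tokens at a steady per-user rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Quoted peak per-user rate from the announcement (an upper bound, not a guarantee).
PEAK_TPS = 3000

# Hypothetical response lengths, chosen purely for illustration.
for num_tokens in (500, 1500, 6000):
    seconds = generation_time_seconds(num_tokens, PEAK_TPS)
    print(f"{num_tokens} tokens at {PEAK_TPS} TPS: about {seconds:.2f} s")
```

At the quoted peak, a 1,500-token answer would stream in roughly half a second, which is why the figure matters for real-time applications.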

Here are some key points about the Core42 announcement:

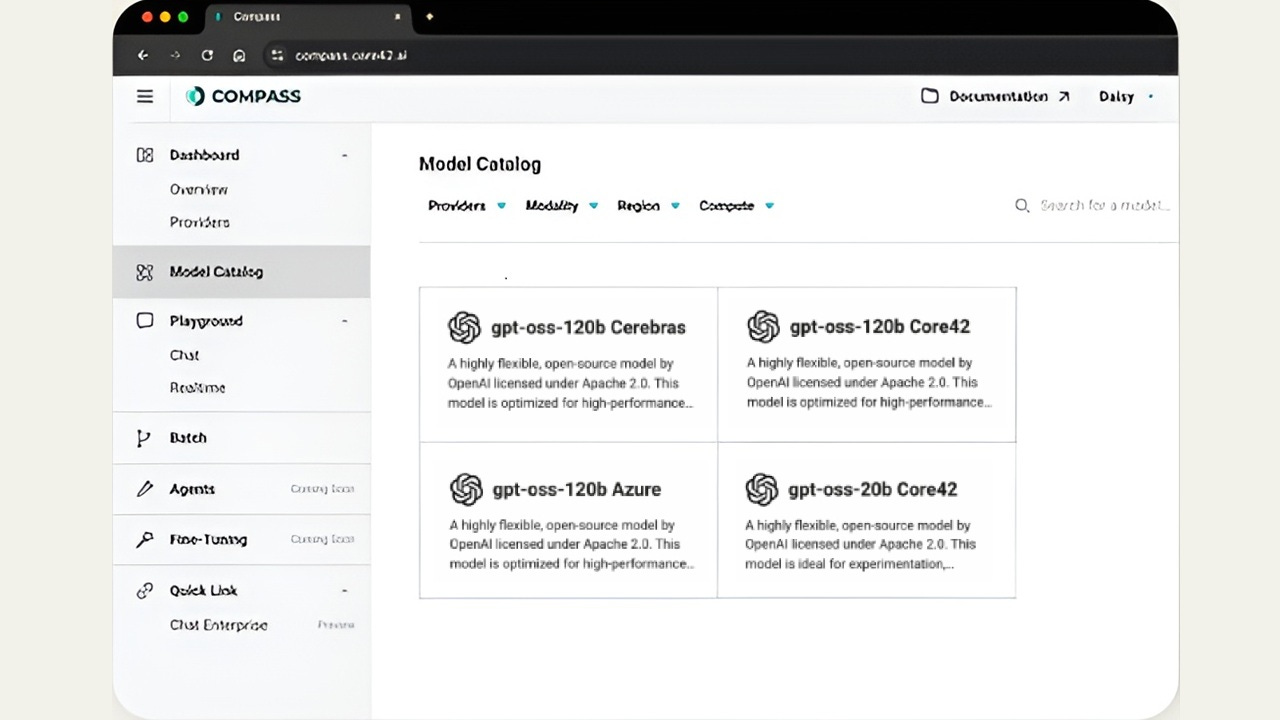

Core42, a UAE-based leader in sovereign cloud and AI infrastructure, has launched global access to OpenAI's open-weight models gpt-oss-20B and gpt-oss-120B through its sovereign AI Cloud platform, with Compass API integration.

Corporate users can choose among AMD, Cerebras, Nvidia or Microsoft Azure services to match each workload to the accelerator that offers the best speed and price-performance.

Core42’s gpt-oss-120B deployment on Cerebras clusters offers up to 3,000 tokens per second (TPS) per user, for real-time AI at scale.

Core42 provides sovereign-ready scalability for UAE organisations with full in-country deployment controls supporting regulated sectors including healthcare, finance and national security.

The Compass API enables organisations to access open-weight models with flexibility to choose optimal silicon platforms, whilst maintaining transparency, fine-tuning capabilities and sovereign deployment options.

The new OpenAI open-weight models can be run on Core42 platforms for enterprise-scale capabilities such as advanced automation, decision-making and real-time AI experiences.

Core42’s two largest high-performance computing (HPC) systems were recently ranked 32nd and 33rd in the world on the TOP500 list.

ZOOM OUT - Released last week, OpenAI's new gpt-oss models advance open-weight AI with a mixture-of-experts architecture. The gpt-oss-120B model contains 117B total parameters but activates only 5.1B parameters per token for efficient processing. These models leverage reinforcement learning techniques derived from OpenAI's most sophisticated internal systems, including o3, delivering reasoning performance comparable to proprietary alternatives. Released under the Apache 2.0 licence, the models enable flexible deployment from edge devices to enterprise infrastructure, supporting democratised access to advanced language model capabilities.
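The efficiency claim follows from simple arithmetic on the two parameter counts quoted above. The sketch below works it out; the variable names are illustrative, and the ratio describes parameters used per token, not a measured speed-up.

```python
# Parameter counts for gpt-oss-120B as quoted in the article, in billions.
TOTAL_PARAMS_B = 117.0
ACTIVE_PARAMS_B = 5.1

# Share of the model's weights that take part in processing each token.
active_fraction = ACTIVE_PARAMS_B / TOTAL_PARAMS_B

print(f"Active per token: {active_fraction:.1%} of total parameters")
print(f"Roughly 1 in {TOTAL_PARAMS_B / ACTIVE_PARAMS_B:.0f} parameters is used per token")
```

In other words, each token touches only about 4.4% of the model's weights, which is how a mixture-of-experts model keeps per-token compute well below that of a dense model of the same total size.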

[Written and edited with the assistance of AI]

LINKS

gpt-oss model page (OpenAI)

gpt-oss code (GitHub)

gpt-oss 120B (Hugging Face)

gpt-oss 20B (Hugging Face)