TII launches world’s most powerful SLMs under 13B parameters

Lightweight Falcon AI models run on laptops, with multimodal models coming in 2025

#UAE #LLMs – Technology Innovation Institute (TII), the global applied research centre of the Advanced Technology Research Council (ATRC), has unveiled Falcon 3, a groundbreaking series of small language models (SLMs). Trained on 14 trillion tokens, more than double the number used to train its predecessor, Falcon 3 sets new global benchmarks for efficiency and performance while running on light infrastructure, including laptops and single GPUs. The Falcon 3 series includes four model sizes, Falcon3-1B, -3B, -7B and -10B, all released as open-source models on the Hugging Face community website.

SO WHAT? – With the Falcon 3 release, Technology Innovation Institute (TII) aims to redefine what small AI models can do, giving businesses and individuals access to advanced AI without heavy infrastructure. The new series follows the Falcon 2 series released in May 2024, which included two smaller, less resource-hungry models: Falcon 2 11B, trained on 5.5 trillion tokens, and Falcon 2 11B VLM, TII's first multimodal LLM with vision-to-language model (VLM) capabilities. This week's Falcon 3 release builds on the Falcon foundation models and doubles down on developing smaller models that still deliver strong performance.

Here are some key details about the announcement:

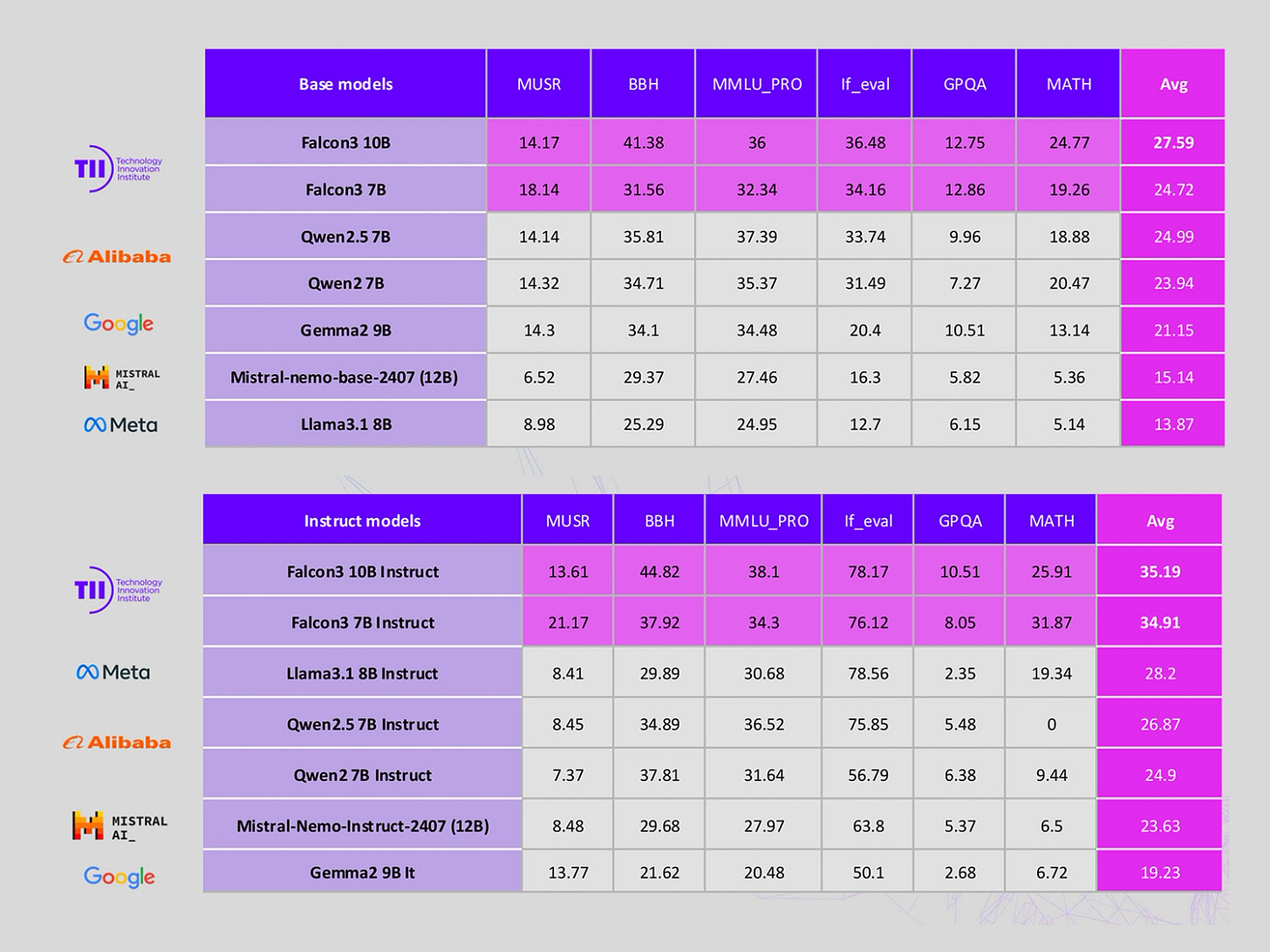

TII has released Falcon 3, a groundbreaking series of small language models (SLMs). Trained on 14 trillion tokens, Falcon 3 delivers superior performance across various benchmarks in the sub-13B parameter category and can run on laptops and single GPUs.

Falcon3-10B now leads the Hugging Face leaderboard for models under 13 billion parameters, surpassing open-source competitors including Meta's Llama variants.

Falcon 3 comes in four model sizes: 1B, 3B, 7B, and 10B. Each size is offered in Base and Instruct variants to meet different user needs.

These new models operate efficiently on laptops, single GPUs, and light infrastructure, democratising access to cutting-edge AI technology.

Falcon 3 was trained on 14 trillion tokens, over twice the data volume of its predecessor, delivering enhanced performance in reasoning, code generation, and instruction following.

Falcon 3 supports English, French, Spanish, and Portuguese, expanding accessibility for global users.

Falcon 3 models are available for download on Hugging Face and FalconLLM.TII.ae, alongside benchmark data.

Quantised versions allow the models to be integrated into resource-constrained systems for fast deployment and inference.

Falcon 3’s testing environment allows researchers, programmers, and developers to experiment with Falcon 3 before full-scale implementation.

All models in the Falcon 3 family are available in Instruct versions as well as GGUF, GPTQ-Int4, GPTQ-Int8, AWQ, and 1.58-bit formats, offering flexibility for a wide range of applications.

The TII team has also enhanced Falcon Mamba 7B, originally released in August, by training it on an additional 2 trillion tokens. The resulting Falcon3-Mamba-7B-Base offers improved reasoning and mathematical capabilities.

From January 2025, additional models will join the Falcon 3 series, adding text, image, video, and voice capabilities and broadening its real-world applications.
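To put the quantised formats listed above in perspective, here is a rough, hypothetical estimate of how much memory the model weights alone would need, computed as parameters × bits per weight ÷ 8. It assumes nominal parameter counts (1B, 3B, 7B, 10B rather than the exact published figures) and generic bit widths (GPTQ-Int4 and AWQ both treated as 4-bit), and it ignores activations, the KV cache and quantisation metadata, so real footprints will be larger. These are illustrative numbers, not TII's.

```python
# Hypothetical, weights-only memory estimate for Falcon 3 model sizes.
# Assumptions: nominal parameter counts (not exact figures) and no overhead
# for activations, KV cache, or quantisation scales/metadata.

NOMINAL_PARAMS = {
    "Falcon3-1B": 1e9,
    "Falcon3-3B": 3e9,
    "Falcon3-7B": 7e9,
    "Falcon3-10B": 10e9,
}

# Generic bits per weight; Int4 stands in for both GPTQ-Int4 and AWQ.
BITS_PER_WEIGHT = {
    "BF16": 16,
    "Int8": 8,
    "Int4": 4,
    "1.58-bit": 1.58,
}


def weight_memory_gb(params: float, bits: float) -> float:
    """Return approximate weight storage in gigabytes (10**9 bytes)."""
    return params * bits / 8 / 1e9


if __name__ == "__main__":
    for model, params in NOMINAL_PARAMS.items():
        row = ", ".join(
            f"{fmt}: {weight_memory_gb(params, bits):.1f} GB"
            for fmt, bits in BITS_PER_WEIGHT.items()
        )
        print(f"{model} -> {row}")
```

Under these assumptions, the 10B model shrinks from about 20 GB of weights in BF16 to about 5 GB at 4-bit and just under 2 GB at 1.58-bit, which is what makes laptop and single-GPU deployment plausible.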

ZOOM OUT – The release of Falcon 3 underscores TII's leadership in AI innovation and its strategic focus on democratising access to the most advanced AI models. By developing less resource-hungry models, TII stands to grow a bigger ecosystem, encouraging more developers and enterprise users to adopt Falcon. Coming a little more than six months after the release of Falcon 2, the new smaller, more powerful models demonstrate TII's commitment to developing Falcon's capabilities at pace. This will not go unnoticed by enterprise users or the developer community, and it brings TII's goal of creating a future de facto LLM standard another step closer.

LINKS

Falcon 3 (Falcon website)

Falcon 3 open-source space (Hugging Face)

Read the Falcon 3 blog post (GitHub)

Read more about Falcon large language models:

TII launches Falcon's first SSLM (Middle East AI News)

TII debuts multimodal Falcon 2 Series (Middle East AI News)

Could Falcon become the Linux of AI? (Middle East AI News)

Abu Dhabi launches new global AI company (Middle East AI News)

Disrupt or be disrupted (Middle East AI News)

Can UAE-built Falcon rival global AI models? (Middle East AI News)