Groq opens EMEA's largest AI compute centre in Saudi Arabia

The new GroqCloud region is powered by 19,000 of the company's LPUs

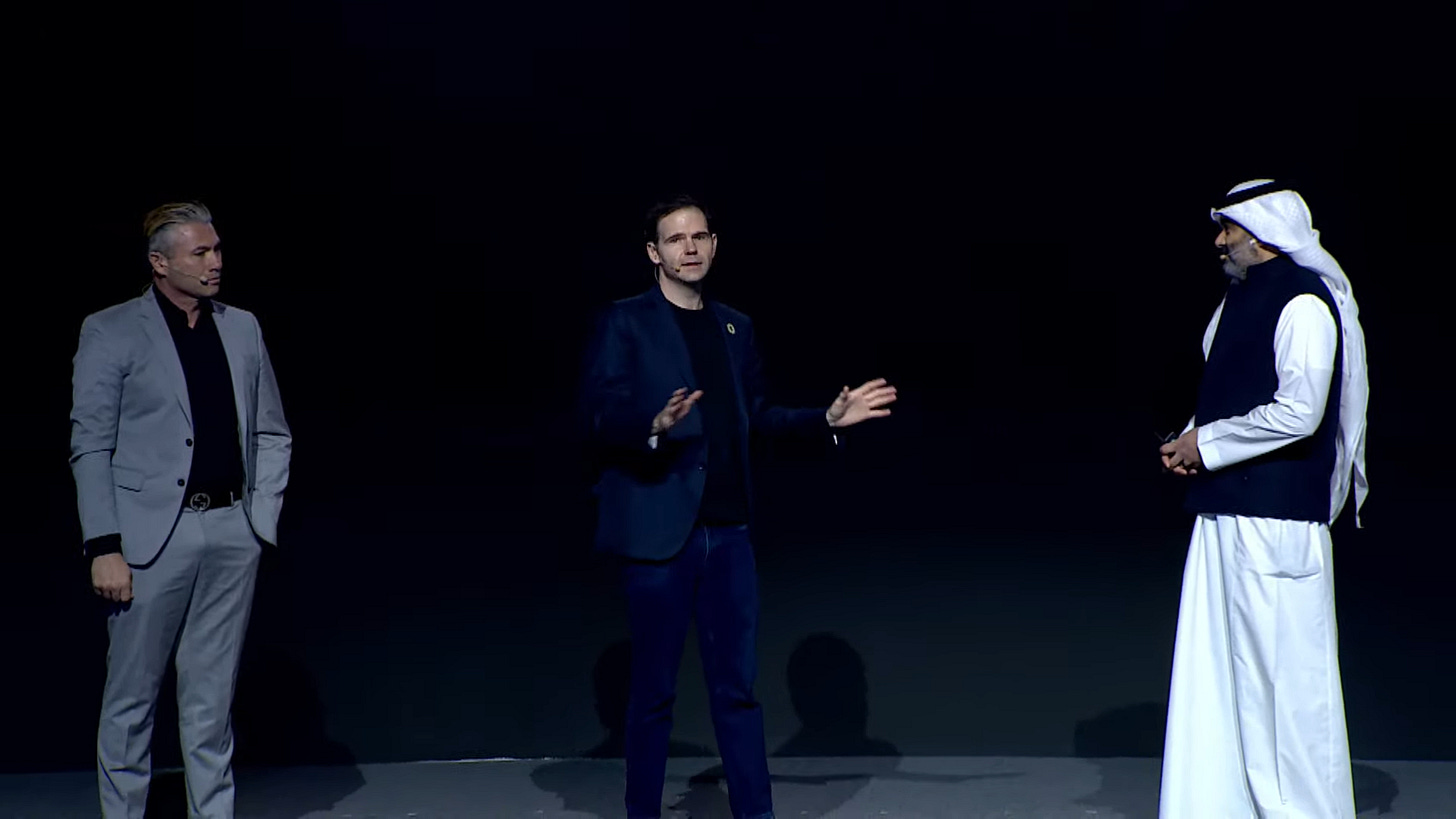

#Saudi #LEAP25 - Jonathan Ross, CEO of Groq, today announced the opening of the AI inference leader’s second GroqCloud region and Europe, the Middle East and Africa’s largest AI inferencing centre in Dammam, backed by a $1.5 billion investment in Saudi Arabia. Speaking on the main stage of LEAP 2025 in the company of Tareq Amin, former CEO of Aramco Digital, and H.E. Abdullah Alswaha, Minister of Communications and Information Technology, Ross confirmed that the GroqCloud region is now live, running on 19,000 Groq LPUs (language processing units). The new AI infrastructure hub was built in partnership with Aramco Digital and with the financial backing of Aramco. Amin emphasised that the GroqCloud service was not being priced at a premium and that Saudi Arabia now has the lowest cost for inferencing AI models in the world.

SO WHAT? - The debut of Groq’s AI inferencing cloud region in Saudi Arabia marks the first time that a public cloud region has been launched to serve international users beyond the Middle East. It’s also noteworthy that while some other vendors are making plans to offer the latest AI cloud compute services in the region, Groq has now deployed cutting-edge AI infrastructure, at scale, offering the fastest inferencing service available anywhere in the world. The new hub will significantly reduce latency for users of GroqCloud in Saudi Arabia and across the region.

Here are some more key details about Groq’s new AI infrastructure hub:

At LEAP 2025 today, Aramco Digital and Groq announced the opening of Europe, the Middle East and Africa’s largest AI compute infrastructure hub in Dammam, Saudi Arabia. The announcement was made by Tareq Amin, former CEO of Aramco Digital, and Jonathan Ross, CEO & Founder of Groq, on the LEAP25 main stage, hosted by H.E. Abdullah Alswaha, Minister of Communications and Information Technology of Saudi Arabia.

The new AI inference centre hosts Groq’s second GroqCloud region globally, which is powered by 19,000 LPUs (language processing units).

Groq is building high-performance AI infrastructure designed to serve over 4 billion people across Saudi Arabia, the Middle East, Africa, and beyond. The AI inferencing centre project is backed by $1.5 billion in combined Aramco and Groq investment.

At LEAP in March 2024, Tareq Amin, then CEO of Aramco Digital, and Jonathan Ross, CEO & Founder of Groq, announced a new partnership between the two companies to build the world’s largest AI compute centre.

In September 2024, during SDAIA’s Global AI Summit (GAIN), Groq announced that it would provide 20% to 40% of its on-demand token-as-a-service for AI inference via a new inferencing data centre in Saudi Arabia.

According to Ross, Groq will deploy at least 25 million tokens-per-second of compute by the end of Q1 2025. Meanwhile, Aramco Digital and Groq noted at GAIN that the new inferencing centre could be expanded to provide one billion tokens-per-second of compute capacity.
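To put those two throughput figures in proportion, the arithmetic can be sketched in a few lines of Python. This is an illustrative calculation only: the function and constant names are hypothetical, and the daily figure assumes the stated rate is sustained around the clock, which the announcement does not claim.

```python
# Back-of-the-envelope arithmetic on the throughput figures quoted above.
# All names here are illustrative; only the two rates come from the article.

SECONDS_PER_DAY = 24 * 60 * 60

INITIAL_TPS = 25_000_000       # "at least 25 million tokens-per-second" by end of Q1 2025
TARGET_TPS = 1_000_000_000     # possible expansion to "one billion tokens-per-second"


def expansion_factor(initial_tps: int, target_tps: int) -> float:
    """How many times larger the target throughput is than the initial one."""
    return target_tps / initial_tps


def tokens_per_day(tps: int) -> int:
    """Tokens processed in 24 hours, ASSUMING the rate is sustained continuously."""
    return tps * SECONDS_PER_DAY


if __name__ == "__main__":
    factor = expansion_factor(INITIAL_TPS, TARGET_TPS)
    print(f"Expansion factor: {factor:.0f}x")
    print(f"Tokens per day at initial rate: {tokens_per_day(INITIAL_TPS):,}")
```

On these figures, the potential expansion would be a 40-fold increase over the initial deployment target.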

In December, Groq loaded up several Boeing 747 jets with a massive shipment of LPUs (Language Processing Units) and GroqRacks bound for Saudi Arabia. The shipment contained hundreds of GroqRacks housing tens of thousands of LPUs.

Aramco Digital and Groq teams worked through the 2024-2025 holiday season to build and configure Groq systems for the new AI inferencing centre onsite in Dammam, Saudi Arabia.

Earlier this month, Groq announced the appointment of Fahad AlTurief as Vice President & Managing Director, MENA, based at Groq’s new regional headquarters in Saudi Arabia.

ZOOM OUT - The significant and sustained growth in trained AI models moving into production environments has led to fast-growing demand for inference. Since GroqCloud launched in March last year, more than 800,000 developers worldwide have joined the real-time inference platform, with thousands of applications running on the LPU™ Inference Engine via the Groq API. The platform promises real-time inferencing with lower latency and greater throughput than its competitors, mainly for use with generative and conversational AI applications. Groq developed the LPU, or language processing unit, for faster inference, co-locating compute and memory on the same chip and so eliminating resource bottlenecks.

Read more about Groq’s new AI compute hub in Saudi Arabia:

Groq ships to Saudi for huge AI infrastructure cluster (Middle East AI News)

Groq to offer on-demand services from Saudi Arabia (Middle East AI News)