Khalifa University, UAEU release first open 6G AI benchmark

Open-source LLM benchmark tests 22 AI models across 30 tasks

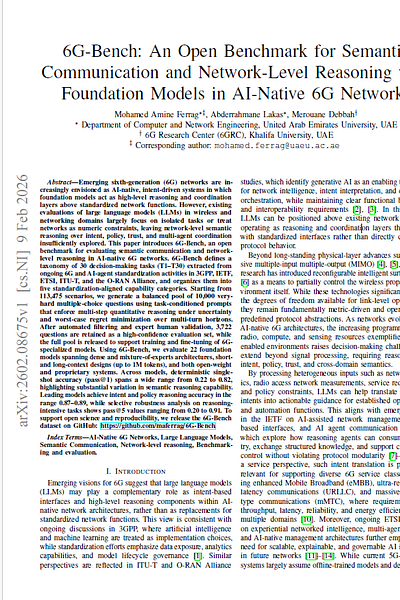

#UAE #LLMs – The 6G Research Centre at Abu Dhabi-based Khalifa University and the Department of Computer and Network Engineering at UAE University have released 6G-Bench, the first open benchmark designed to evaluate semantic communication and network-level reasoning with foundation models in AI-native 6G networks. The benchmark features 10,000 multiple-choice questions derived from 113,475 scenarios, with 3,722 expert-validated questions testing 30 decision-making tasks. The researchers evaluated 22 contemporary foundation models, with accuracy ranging from 22.8% to 82.9%. Mid-scale models offered the strongest balance between accuracy, robustness, and deployability, whilst trust, security, and distributed intelligence tasks remained the most challenging. The benchmark is aligned with telecom standards from 3GPP, IETF, ETSI, ITU-T, and the O-RAN Alliance.

SO WHAT? – The researchers have open-sourced the complete benchmark infrastructure for 6G-Bench, including the dataset, task definitions and taxonomy, evaluation scripts, and documentation. The release enables any model builder or researcher to assess LLMs for 6G deployment in a reproducible way, either locally or via APIs. The project aims to address the telecom industry's longstanding challenge of fragmented AI evaluation, where proprietary benchmarks and vendor claims have made it difficult to compare models objectively. The 6G-Bench framework allows network operators, equipment manufacturers and research institutions to independently verify whether foundation models possess the semantic reasoning capabilities required for intent-driven, policy-aware 6G networks before committing to deployment.

Here are some key points regarding 6G-Bench:

Khalifa University’s 6G Research Centre and the Department of Computer and Network Engineering at UAE University have released 6G-Bench, the first open benchmark designed to evaluate semantic communication and network-level reasoning with foundation models in AI-native 6G networks.

The benchmark organises 30 decision-making tasks into five capability categories: intent and policy reasoning, network slicing and resource management, trust and security awareness, AI-native networking and agentic control, and distributed intelligence and emerging 6G use cases.

Researchers constructed questions through a task-conditioned pipeline enforcing very-hard difficulty levels, multi-step quantitative reasoning under uncertainty, and worst-case regret minimisation over multi-turn decision horizons.

The evaluation suite includes 22 foundation models spanning code-specialised and general-purpose systems, multimodal and long-context architectures supporting up to one million tokens, dense and mixture-of-experts designs, and both open-weight and proprietary models.

Leading models achieved intent and policy reasoning accuracy clustered between 87% and 89%, whilst selective robustness on reasoning-intensive tasks reached 91.6% accuracy under pass@5 evaluation.

The benchmark is grounded in ongoing standardisation activities from major telecommunications bodies, positioning large language models as reasoning and coordination layers that interact with standardised interfaces rather than directly controlling protocol behaviour.

Results indicate that mid-scale foundation models currently deliver the most favourable balance for deployment, whilst trust-aware, security-critical, and distributed intelligence tasks represent the key bottlenecks requiring further architectural innovation.

The 6G-Bench research team included: Mohamed Amine Ferrag (UAE University), Abderrahmane Lakas (UAE University), Merouane Debbah (Khalifa University).
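For readers unfamiliar with the metrics quoted in the key points above, the following is a minimal sketch of how per-category accuracy and a pass@5 score might be computed for a multiple-choice benchmark. It is not taken from the 6G-Bench evaluation scripts. The record layout, the function names and the toy data are all hypothetical, and it assumes pass@k means a question counts as solved if any of the first k sampled answers matches the correct option, which may differ from the definition the researchers used.

```python
from collections import defaultdict


def pass_at_k(samples, answer, k):
    """Return True if any of the first k sampled answers is the correct option.

    Assumption: this simple "any of k" rule stands in for pass@k here.
    """
    return answer in samples[:k]


def score_by_category(records, k=5):
    """Compute pass@1 and pass@k accuracy for each capability category.

    Each record is a dict with hypothetical keys:
      "category": capability category name
      "answer":   the correct option letter
      "samples":  list of option letters sampled from the model
    """
    totals = defaultdict(int)
    hits_1 = defaultdict(int)
    hits_k = defaultdict(int)
    for rec in records:
        cat = rec["category"]
        totals[cat] += 1
        hits_1[cat] += pass_at_k(rec["samples"], rec["answer"], 1)
        hits_k[cat] += pass_at_k(rec["samples"], rec["answer"], k)
    return {
        cat: {
            "pass@1": hits_1[cat] / totals[cat],
            f"pass@{k}": hits_k[cat] / totals[cat],
        }
        for cat in totals
    }


# Toy data for illustration only; these are not 6G-Bench questions or results.
records = [
    {"category": "intent and policy reasoning", "answer": "B",
     "samples": ["B", "B", "A", "B", "B"]},
    {"category": "intent and policy reasoning", "answer": "D",
     "samples": ["A", "D", "D", "C", "D"]},
    {"category": "trust and security awareness", "answer": "C",
     "samples": ["A", "A", "B", "D", "A"]},
    {"category": "trust and security awareness", "answer": "A",
     "samples": ["A", "C", "A", "A", "B"]},
]

for category, scores in score_by_category(records).items():
    print(category, scores)
```

Under this reading, pass@5 can only match or exceed single-attempt accuracy, since a model that is wrong on its first attempt gets credit if a later sample is correct.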

ZOOM OUT – The 6G-Bench release follows Khalifa University’s October 2025 announcement of GSMA Open-Telco LLM Benchmarks 2.0, developed with the GSMA Foundry community and 15 mobile network operators including AT&T, Deutsche Telekom, Orange, Telefónica and Vodafone. That earlier benchmark focused on current 5G network operations across configuration generation, troubleshooting and standards interpretation, whilst 6G-Bench extends evaluation into future network architectures requiring intent-driven reasoning, policy awareness and distributed intelligence. The initiatives position Khalifa University’s 6G Research Centre as a key contributor to global telecommunications AI standards.

[Written and edited with the assistance of AI]

LINK

6G-Bench code (GitHub)

Read more about telecom AI Models

Khalifa University announces telecom benchmarks (Middle East AI News)

Telecom industry partners develop Arabic Telecom LLM (Middle East AI News)

GSMA releases telecom AI benchmarks (Middle East AI News)

New leaderboard to support development of telecom LLMs (Middle East AI News)

Testing phase begins for TelecomGPT (Middle East AI News)