MBZUAI releases K2 V2 open-source reasoning model

Fully transparent AI model release includes training data and code

#UAE #Reasoning - Abu Dhabi AI research university Mohamed bin Zayed University of Artificial Intelligence (MBZUAI) has released K2 (Version 2), a 70-billion-parameter open-source frontier model designed for advanced reasoning, with full transparency including weights, training code, data composition, mid-training checkpoints and evaluation frameworks. Developed by MBZUAI’s Institute of Foundation Models, the model rivals open-weight leaders in its size class, outperforming Qwen2.5-72B and approaching Qwen3-235B performance. The new model is explicitly built for deep reasoning, long-context processing up to 512,000 tokens, and native tool use beyond typical chatbot functions.

The K2 V2 model is released under MBZUAI’s 360-open approach, publishing complete pre-training corpus composition, mid-training datasets including the TxT360-Midas reasoning corpus, supervised fine-tuning data, training logs, hyperparameters and infrastructure details for full reproducibility.

SO WHAT? - According to MBZUAI, the K2 frontier-scale system is intentionally built to be examined, extended and improved in public with full lifecycle documentation. For industry teams, K2 provides a reasoning-ready foundation that makes domain adaptation and continuous training far more predictable than working with black-box systems where capabilities and training decisions remain opaque. For researchers, the model offers an unprecedented testbed to study fundamental questions about chain-of-thought training, reinforcement learning-based reasoning, safety divergence and long-context mechanisms.

Here are some key facts about the new K2 V2:

Mohamed bin Zayed University of Artificial Intelligence (MBZUAI) has released K2 V2 via its Institute of Foundation Models. The 70-billion-parameter dense transformer model has been explicitly built for advanced reasoning, placing it in the same class as Qwen2.5-72B whilst demonstrating stronger reasoning capabilities through dedicated mid-training design.

The model achieves 55.1 percent on the GPQA-Diamond graduate-level science benchmark, rising to 69.3 percent after supervised fine-tuning; 93.6 percent on GSM8K with structured reasoning prompts; and 94.7 percent on the MATH dataset. It also matches DeepSeek-R1 and o3-mini-high at 83 percent on the Knights and Knaves-8 People logic puzzles.

K2 V2 undergoes three distinct training phases: pre-training for breadth and fluency; mid-training to infuse long-context skills up to 512,000 tokens and explicit reasoning behaviors; and supervised fine-tuning to enable assistant capabilities whilst leaving capacity for future reinforcement learning.

For the mid-training phase, the team assembled over 250 million unique mathematics problems with synthesized multi-step solutions, alongside reasoning behaviors covering dual-process analysis, planning, data science exploration and stepwise instructions across more than 100 prompt templates grounded in real user queries.

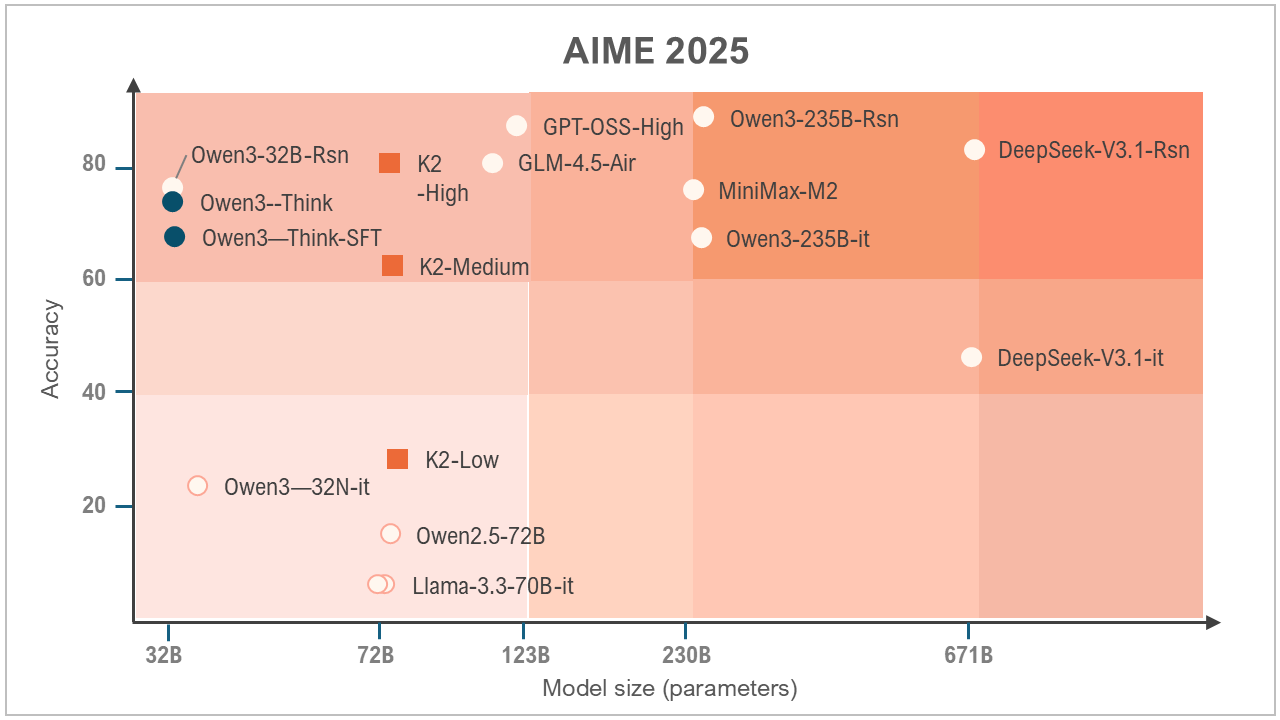

MBZUAI introduces three reasoning effort modes (low, medium and high) that control how many thinking tokens the model generates before answering. High effort delivers the strongest results on difficult tasks, whilst low effort provides cost-effective reasoning, with only a few hundred additional tokens markedly improving answer quality.

On Berkeley’s Function Calling Leaderboard v4, K2-Medium scores 52.4 overall, beating Qwen2.5-72B and Llama-3.3-70B in function-calling tasks, particularly excelling in multi-turn interactions requiring state maintenance and parameter filling across back-and-forth messages.

Safety evaluation across 72 adversarial stress tests shows K2 V2 produces safe or appropriately refusing responses approximately 86 percent of the time, with performance above 95 percent on chemistry, biology, financial compliance and social harms categories, though the model remains vulnerable to evolving jailbreak patterns.
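
To make the multi-turn function-calling point above more concrete, the short Python sketch below shows what "state maintenance and parameter filling" typically involves: an assistant keeps track of the arguments gathered so far and only issues the tool call once every required parameter is known. It is an illustration only and does not use K2 V2's actual interface; the `book_flight` tool, its parameter names and the `ToolCallState` class are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical tool schema: the tool name and its parameters are
# illustrative assumptions, not part of any K2 V2 API.
REQUIRED_PARAMS = {"book_flight": ["origin", "destination", "date"]}


@dataclass
class ToolCallState:
    """Tracks the arguments collected for one tool across several turns."""

    tool: str
    params: dict = field(default_factory=dict)

    def update(self, new_params: dict) -> None:
        # Merge values extracted from the latest user message,
        # ignoring anything the user has not yet supplied (None).
        self.params.update(
            {k: v for k, v in new_params.items() if v is not None}
        )

    def missing(self) -> list:
        # Required parameters that still need to be asked for.
        return [p for p in REQUIRED_PARAMS[self.tool] if p not in self.params]

    def ready(self) -> bool:
        # The call can be issued only when nothing is missing.
        return not self.missing()


# Simulated three-turn exchange.
state = ToolCallState("book_flight")
state.update({"origin": "Abu Dhabi"})                   # turn 1
state.update({"destination": "London", "date": None})   # turn 2
still_needed = state.missing()                          # date is outstanding
state.update({"date": "2026-02-01"})                    # turn 3
can_call = state.ready()
```

Benchmarks such as the function-calling leaderboard mentioned above test whether a model can do this kind of bookkeeping implicitly across back-and-forth messages, rather than relying on application code like this.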

ZOOM OUT - The K2 release builds upon MBZUAI’s earlier model K2-65B (released 2024) and its breakthrough K2 Think, a 32-billion-parameter reasoning model unveiled in September 2025. That smaller 32B model demonstrated that advanced reasoning capabilities don’t necessarily require massive computational resources, outperforming flagship reasoning models 20 times its size. K2 Think led all open-source models in mathematics performance on the AIME ’24/’25, HMMT ’25 and OMNI-Math-HARD benchmarks. It also achieved throughput of 2,000 tokens per second on Cerebras wafer-scale systems, establishing MBZUAI’s position in efficient AI development.

[Written and edited with the assistance of AI]

LINKS

K2 V2 Think Data (Hugging Face)

K2 V2 Code (GitHub)

K2 V2 Evaluation Code (GitHub)

K2 V2 technical report (LLM360)

K2 Think (website)

Download for iOS (added 27-Jan-26)

Download for Android (added 27-Jan-26)

K2 V2 blogpost (MBZUAI)

Read more about MBZUAI open source initiatives:

K2 Think 32B rivals reasoning models 20 times its size (Middle East AI News)

UAE President backs UAE-made AI reasoning model (Middle East AI News)

LLM360 project empowers pre-trainers (Middle East AI News)

Powerful open-source K2-65B LLM costs 35% less to train (Middle East AI News)

New framework for open-source LLMs (Middle East AI News)
