Research could dramatically improve tokenisation of Arabic data sets

OpenBabylon research reduces tokenisation cost and improves AI model quality

#USA #tokenisation - Californian AI startup OpenBabylon has developed an innovative and comprehensive approach to improving the efficiency of tokenisation for bilingual large language models (LLMs) for English and underrepresented languages, such as Arabic. The model-agnostic approach to developing bilingual LLMs that support languages using non-Latin character sets, such as Arabic, Georgian and Ukrainian, proved to enhance language performance and reduce computational costs by up to three times. Additionally, the research proposes new metrics for evaluating language quality.

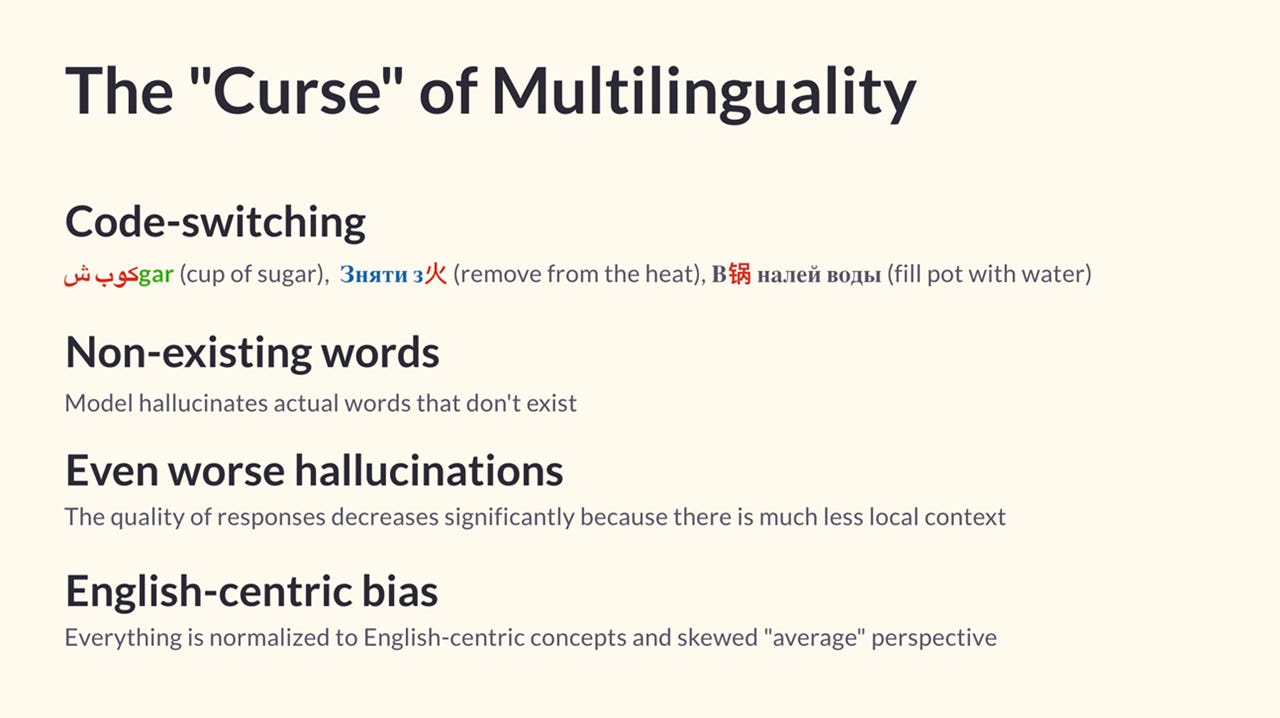

SO WHAT? - The root problem that impacts both Arabic LLM quality and the cost of building Arabic models is the relative scarcity of quality, well-rounded data sets in the language. Much of the Arabic language data available is poor quality and some types of language data are more abundant than others. This makes both choosing the right data sets and the techniques used for tokenising data for model training all the more critical for developing Arabic language models. The new methodology from OpenBabylon creates a model training pipeline that the researchers say, maximises the value derived from the training data, whilst improving processing efficiency and lowering computational costs. Experimentation demonstrated improvements in grammar accuracy and language preservation, and achieving near-elimination of inappropriate code-switching, a common issue in standard AI models.

Here are some key points about the new research.

OpenBabylon, a California-based language-focused AI company, has introduced a new pre-training methodology to build more cost-effective bilingual large language models (LLMs) for English and underrepresented languages, overcoming major tokenisation challenges.

The new research paper from OpenBabylon (with contributions from 13 researchers), titled From English-Centric to Effective Bilingual: LLMs with Custom Tokenizers for Underrepresented Languages, reveals an innovative and comprehensive approach to data tokenisation.

By creating custom tokenisers, OpenBabylon significantly reduces the cost of processing languages that use non-Latin characters like Arabic, Georgian, and Ukrainian, while preserving linguistic accuracy.

Experimental research using Arabic, Georgian, and Ukrainian language data, \demonstrates a threefold reduction in computational costs for processing non-English languages while maintaining high language quality.

The methodology extends token vocabulary, introduces new embeddings, and optimizes training to mitigate penalization of non-English languages.

OpenBabylon’s research also introduces new evaluation metrics to measure language quality, showing that a larger vocabulary improves grammar accuracy and reduces errors such as code-switching.

Tokenisation advancements showed a 90% reduction in non-existing word generation and a significant decrease in inappropriate code-switching between languages.

Testing on the FLORES-200 dataset, OpenBabylon's model displayed improved language quality metrics like tokenizer fertility, non-existing words ratio, and grammar correctness scores, emphasizing robust language processing.

Key partners in the tokenisation research project include Amazon Web Services (AWS), Google for Startups, Hot Aisle Inc., NVIDIA, and Observea

OpenBabylon’s research paper was supported by extensive academic collaboration, including contributions from the Arab Center for Research and Policy Studies and the Doha Graduate Studies University, enriching Arabic language contextual understanding.

ZOOM OUT - As with most cutting-edge technologies, developing large language models in Arabic lags behind development in English. Although an increasing number of Arabic language models are being developed, when it comes to developing a pipeline for training, fine-tuning and evaluation, research teams tend to adopt quite different approaches. No doubt the open-source initiatives that have shared new approaches to creating data pipelines over the past year are valuable. However, most LLM and NLP researchers would agree that more research and experimentation is needed to create more effective bilingual Arabic models. Therefore, the work of OpenBabylon could represent a critical step in helping to improve the fluency and accuracy of future Arabic LLMs.

LINKS

Download the research paper (arXiv)

Read more about Arabic LLMs:

MBZUAI launches multimodal Arabic AI benchmark (Middle East AI News)

Tarjama& launches Pronoia LLM for business Arabic (Middle East AI News)

MBZUAI-led team builds Moroccan Arabic AI models (Middle East AI News)

Arabic LLM index launched at GAIN (Middle East AI News)