Khalifa University unveils breakthrough RF AI model

Researchers create first foundation model to process radio frequency spectrograms

#UAE #6G #LLMs – Abu Dhabi-based Khalifa University’s Digital Future Institute has released RF-GPT, the first radio-frequency language model that integrates RF spectrograms** into a multimodal model, enabling AI systems to process, understand and reason over wireless signals in natural language. The model addresses a fundamental gap in telecoms AI: existing language models process only text and structured data, so conventional RF deep-learning models have to be built separately for each signal-processing task. RF-GPT is designed to bridge the gap between RF perception and high-level reasoning.

SO WHAT? – RF-GPT represents a turning point: a shift from siloed signal-processing pipelines to instruction-driven, multi-task RF intelligence. In this new approach, a single model will be able to classify modulations, detect overlapping signals, extract the 5G NR parameters that define network characteristics and explain its reasoning in response to prompts. This could ultimately remove the need to deploy a separate neural network for each task. By making the physical layer ‘queryable’ in natural language, operators will be able to ask questions such as “Are these overlapping 5G and WLAN signals compliant?” The model also creates a pathway to closed-loop, AI-native radio control, in which RF perception feeds directly into network optimisation and policy decisions. This capability could prove transformational for the development of future AI-native 6G networks, effectively allowing the networks to manage themselves.

Here are some key points about the new RF-GPT model:

Abu Dhabi-based Khalifa University’s Digital Future Institute has announced a breakthrough RF-GPT model. This first-of-its-kind radio-frequency language model integrates RF spectrograms into a multimodal model, enabling AI systems to process, understand and reason over wireless signals in natural language.

RF-GPT converts radio signals into images, then teaches an AI to read and explain those images.

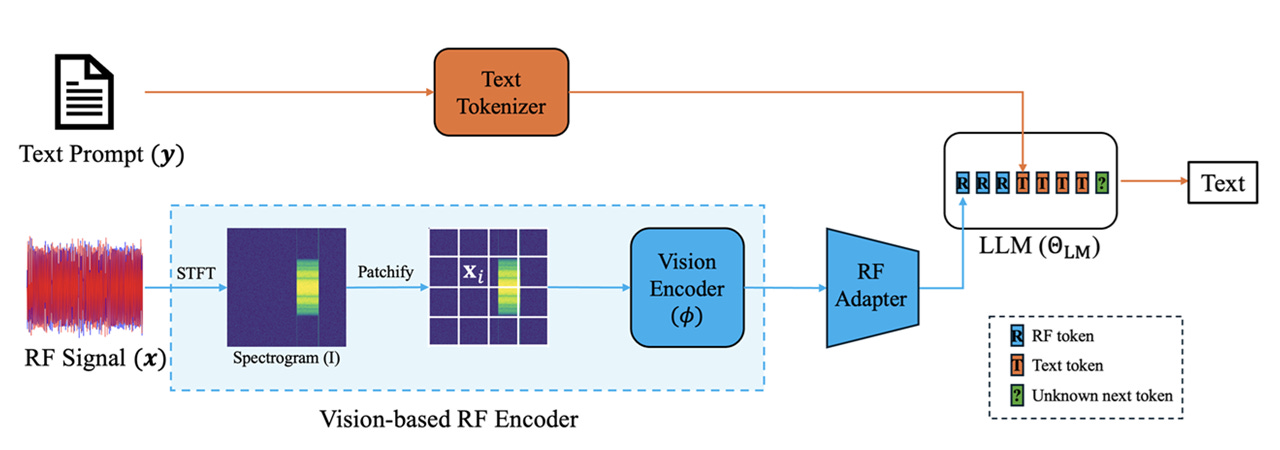

In detail: RF-GPT processes complex in-phase/quadrature* (I/Q) waveforms by mapping them to time-frequency spectrograms, which are passed to pretrained visual encoders. The resulting representations are injected as RF tokens into a decoder-only large language model that generates RF-grounded answers, explanations and structured outputs.
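The first step, turning a complex I/Q waveform into a spectrogram image, can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not RF-GPT's code: the FFT size, hop, Hann window, dB scaling and nearest-neighbour resize to 512x512 are choices made for clarity, and the function names `iq_to_spectrogram` and `to_image` are hypothetical.

```python
import numpy as np

def iq_to_spectrogram(iq: np.ndarray, n_fft: int = 512, hop: int = 256) -> np.ndarray:
    """Map complex I/Q samples to a dB spectrogram (rows = frequency, cols = time).

    Assumed settings (not from the paper): Hann window, two-sided spectrum, DC centred.
    """
    if not np.iscomplexobj(iq):
        raise ValueError("expected complex-valued I/Q samples")
    if len(iq) < n_fft:
        raise ValueError("need at least n_fft samples")
    window = np.hanning(n_fft)
    n_frames = 1 + (len(iq) - n_fft) // hop
    frames = np.stack([iq[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    # Complex input has no spectral symmetry, so keep all bins and shift DC to the middle.
    spectrum = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    power_db = 10 * np.log10(np.abs(spectrum) ** 2 + 1e-12)
    return power_db.T

def to_image(spec_db: np.ndarray, size: int = 512) -> np.ndarray:
    """Scale to 0..255 and resample to size x size with nearest-neighbour indexing."""
    lo, hi = spec_db.min(), spec_db.max()
    norm = (spec_db - lo) / (hi - lo + 1e-12)
    rows = np.linspace(0, spec_db.shape[0] - 1, size).round().astype(int)
    cols = np.linspace(0, spec_db.shape[1] - 1, size).round().astype(int)
    return (norm[np.ix_(rows, cols)] * 255).astype(np.uint8)

# Usage: a 100 kHz complex tone sampled at 1 MHz, plus a little noise.
rng = np.random.default_rng(0)
fs = 1e6
t = np.arange(200_000) / fs
noise = 0.1 * (rng.standard_normal(t.size) + 1j * rng.standard_normal(t.size))
iq = np.exp(2j * np.pi * 100e3 * t) + noise
img = to_image(iq_to_spectrogram(iq))
print(img.shape, img.dtype)  # (512, 512) uint8
```

Keeping the two-sided spectrum matters for I/Q data: positive and negative frequency offsets from the carrier are distinct, so both halves of the image carry information.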

The model outperformed OpenAI’s GPT-4 and Alibaba’s Qwen 2.5 in benchmark testing by the research team across wideband modulation classification, overlap analysis, wireless technology recognition, WLAN user counting and 5G NR information extraction.

The research team trained the AI on 625,000 synthetically generated radio signal examples, eliminating the need for expensive manual data labelling.

In detail: To overcome the lack of paired RF-text training data, the researchers built a large synthetic spectrogram-caption dataset using realistic waveform generators for six wireless technologies (5G NR, LTE, UMTS, WLAN, DVB-S2 and Bluetooth), producing approximately 12,000 RF scenes and 625,000 instruction examples without any manual labelling.
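The key idea behind labelling-free data, that captions come for free because the generator already knows what it placed in each scene, can be sketched as follows. Everything here is hypothetical: the `Emission` record, the bandwidth table, the three question templates and the function names are illustrative assumptions, and the realistic waveform synthesis the researchers used is not reproduced. The article's figures also imply roughly 52 instruction examples per scene on average (625,000 / 12,000 ≈ 52).

```python
import random
from dataclasses import dataclass

TECHNOLOGIES = ["5G NR", "LTE", "UMTS", "WLAN", "DVB-S2", "Bluetooth"]
# Illustrative occupied bandwidths in MHz (assumed, not taken from the paper).
BANDWIDTH_MHZ = {"5G NR": 20.0, "LTE": 10.0, "UMTS": 5.0, "WLAN": 20.0, "DVB-S2": 36.0, "Bluetooth": 1.0}

@dataclass
class Emission:
    technology: str
    center_offset_mhz: float  # offset from the capture centre frequency
    bandwidth_mhz: float

def random_scene(rng: random.Random, max_emissions: int = 3, span_mhz: float = 100.0) -> list[Emission]:
    """Draw a labelled RF scene; a real pipeline would also synthesise its waveform."""
    scene = []
    for _ in range(rng.randint(1, max_emissions)):
        tech = rng.choice(TECHNOLOGIES)
        bw = BANDWIDTH_MHZ[tech]
        center = rng.uniform(-span_mhz / 2 + bw / 2, span_mhz / 2 - bw / 2)
        scene.append(Emission(tech, round(center, 2), bw))
    return scene

def overlaps(a: Emission, b: Emission) -> bool:
    """Two emissions overlap if their occupied bands intersect in frequency."""
    return abs(a.center_offset_mhz - b.center_offset_mhz) < (a.bandwidth_mhz + b.bandwidth_mhz) / 2

def instruction_examples(scene: list[Emission]) -> list[dict]:
    """Turn ground-truth scene labels into instruction/answer pairs with no manual labelling."""
    techs = sorted({e.technology for e in scene})
    any_overlap = any(overlaps(a, b) for i, a in enumerate(scene) for b in scene[i + 1:])
    return [
        {"instruction": "Which wireless technologies are present?", "answer": ", ".join(techs)},
        {"instruction": "How many emissions are in the scene?", "answer": str(len(scene))},
        {"instruction": "Do any emissions overlap in frequency?", "answer": "yes" if any_overlap else "no"},
    ]

rng = random.Random(42)
for example in instruction_examples(random_scene(rng)):
    print(example)
```

Because answers are derived from the same parameters that drive the generator, every example is correct by construction; the cost is that the model only learns what the templates ask about.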

The model introduces scalable synthetic pretraining for RF foundation models, demonstrating how RF models can scale in a similar way to vision or language foundation models, potentially reducing the cost and time of deploying intelligent spectrum management systems across 5G, WLAN, satellite and integrated sensing and communications applications.

Primary end-users will include telecom operators and network systems teams for RF monitoring, optimisation, interference analysis and troubleshooting in live networks, alongside defence and national security organisations for spectrum awareness and contested-environment RF understanding.

Spectrum regulators and spectrum authorities can deploy RF-GPT for compliance monitoring, spectrum governance, cross-technology coexistence analysis and evidence-based policy, whilst industrial AI and sensing teams can leverage RF-aware intelligence for multimodal scene understanding and localisation.

Khalifa University’s newly formed Digital Future Institute develops intelligent ICT platforms and networked systems for telecom, digital infrastructure, energy, climate and security, builds open-source and commercial foundation models for UAE sectors and accelerates AI deployment through funded vertical projects and spin-offs.

The RF-GPT research team included: Hang Zou, Yu Tian, Bohao Wang, Lina Bariah, Samson Lasaulce, Chongwen Huang, and Merouane Debbah.

How does it work? - RF-GPT processes radio signals through a four-stage pipeline that converts invisible electromagnetic waves into language model outputs. First, complex in-phase and quadrature waveforms are transformed into spectrograms: 512x512 pixel images in which modulation patterns, resource block allocations and inter-signal overlaps become visually structured data. Second, a pretrained Vision Transformer partitions each spectrogram into 14x14-pixel patches, producing 1,369 RF tokens. Third, a lightweight linear projection adapter maps these tokens into the language model's embedding space. Finally, a decoder-only Transformer language model generates natural language analysis conditioned on the RF tokens, receiving them exactly as it would receive standard image tokens in a vision-language model, without requiring architectural modifications.
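The token arithmetic and the path from spectrogram to language-model embeddings can be sketched in NumPy. One detail is worth flagging: 512 is not a multiple of 14, and 1,369 = 37 × 37, which with 14-pixel patches implies a 518-pixel input side. The sketch therefore assumes the spectrogram is resized to 518x518 before patching; the article does not say how RF-GPT reconciles the two figures. The encoder is a stand-in (only a random linear patch embedding, with no attention layers), and `VIT_DIM`, `LLM_DIM`, the random weights and the function names are hypothetical.

```python
import numpy as np

PATCH = 14         # 14x14-pixel patches, as described in the article
INPUT_SIDE = 518   # assumption: 37 * 14, so a 37 x 37 grid gives 1,369 tokens
VIT_DIM = 1024     # hypothetical vision-encoder width
LLM_DIM = 4096     # hypothetical language-model embedding width

def to_encoder_input(spec_img: np.ndarray, side: int = INPUT_SIDE) -> np.ndarray:
    """Resize a greyscale uint8 spectrogram (nearest neighbour) and replicate to 3 channels in [0, 1]."""
    rows = np.linspace(0, spec_img.shape[0] - 1, side).round().astype(int)
    cols = np.linspace(0, spec_img.shape[1] - 1, side).round().astype(int)
    resized = spec_img[np.ix_(rows, cols)].astype(np.float32) / 255.0
    return np.repeat(resized[:, :, None], 3, axis=2)

def patchify(img: np.ndarray, patch: int = PATCH) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping patches, one flattened row per patch."""
    h, w, c = img.shape
    if h % patch or w % patch:
        raise ValueError("image side must be a multiple of the patch size")
    gh, gw = h // patch, w // patch
    grid = img.reshape(gh, patch, gw, patch, c).transpose(0, 2, 1, 3, 4)
    return grid.reshape(gh * gw, patch * patch * c)

rng = np.random.default_rng(0)
# Stand-in for the pretrained ViT: only its linear patch embedding, no attention layers.
W_embed = (rng.standard_normal((PATCH * PATCH * 3, VIT_DIM)) * 0.02).astype(np.float32)
# The "lightweight linear projection adapter": one weight matrix plus a bias.
W_adapter = (rng.standard_normal((VIT_DIM, LLM_DIM)) * 0.02).astype(np.float32)
b_adapter = np.zeros(LLM_DIM, dtype=np.float32)

def rf_tokens(spec_img: np.ndarray) -> np.ndarray:
    features = patchify(to_encoder_input(spec_img)) @ W_embed   # (1369, VIT_DIM)
    return features @ W_adapter + b_adapter                      # (1369, LLM_DIM)

tokens = rf_tokens(np.zeros((512, 512), dtype=np.uint8))
print(tokens.shape)                                     # (1369, 4096)
print((512 // PATCH) ** 2, (INPUT_SIDE // PATCH) ** 2)  # 1296 1369
```

Because the adapter is a single matrix multiply, the language model receives a sequence of 1,369 vectors at its own embedding width, which is consistent with the article's point that no architectural change to the decoder is needed.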

The decision to use a vision encoder rather than an audio-style tokeniser is deliberate and technically significant. Unlike speech, radio signals carry critical information in their two-dimensional time-frequency structure, making image-processing techniques far more effective than audio-processing approaches. Notably, the entire model was trained on just eight GPUs in a matter of hours, demonstrating that extending AI into new physical modalities need not require extraordinary computational resources.
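A small numerical illustration of the time-frequency point: two carriers transmitted at the same moment overlap completely in time, yet occupy separate regions of the frequency axis, so they appear as two distinct bands in a spectrogram. The sketch averages a spectrogram over time (a real scene would also vary along the time axis) and counts contiguous occupied bands. The threshold, FFT size, tone placement and the names `occupied_frequencies` and `count_bands` are assumptions for illustration. It shows why the two-dimensional layout carries the information; it does not by itself test the researchers' comparison between vision encoders and audio-style tokenisers.

```python
import numpy as np

def occupied_frequencies(iq: np.ndarray, fs: float, n_fft: int = 256, threshold_db: float = -10.0) -> np.ndarray:
    """Frequencies (Hz) whose time-averaged power is within threshold_db of the strongest bin."""
    n_frames = len(iq) // n_fft
    frames = iq[: n_frames * n_fft].reshape(n_frames, n_fft) * np.hanning(n_fft)
    # Each frame is one spectrogram column; average its power over time.
    power = np.mean(np.abs(np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)) ** 2, axis=0)
    power_db = 10 * np.log10(power / power.max() + 1e-12)
    freqs = np.fft.fftshift(np.fft.fftfreq(n_fft, d=1 / fs))
    return freqs[power_db > threshold_db]

def count_bands(freqs: np.ndarray, bin_hz: float) -> int:
    """Count contiguous groups of occupied bins (a crude stand-in for 'how many signals?')."""
    if freqs.size == 0:
        return 0
    return 1 + int(np.sum(np.diff(freqs) > 1.5 * bin_hz))

fs, n_fft = 1e6, 256
bin_hz = fs / n_fft
n = np.arange(65_536)
# Two carriers that are on at the same time, at +50 and -40 frequency bins.
iq = np.exp(2j * np.pi * 50 * bin_hz * n / fs) + np.exp(-2j * np.pi * 40 * bin_hz * n / fs)
print(count_bands(occupied_frequencies(iq, fs, n_fft), bin_hz))  # 2
```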

ZOOM OUT – In practice, RF-GPT could enable an AI system to perceive and reason over the invisible ocean of radio waves saturating every urban environment. Such a system could identify multiple coexisting wireless technologies, modulation schemes, user counts and standards-compliance anomalies from a single plain-language query about a spectrogram. Scaled across thousands of RF-aware AI agents deployed across a network, the technology could enable real-time spectrum monitoring, autonomous access negotiation, unauthorised transmission detection and cross-band coordination, all through natural language interfaces that human operators and higher-level AI agents can query and override. This is the kind of physical-layer intelligence envisioned for AI-native 6G networks.

The immediate beneficiaries of RF-GPT and its successors will be telecom operators requiring interference analysis and troubleshooting in live networks. The AI model also has applications for defence and national security organisations needing spectrum awareness in contested environments, spectrum regulators requiring evidence-based compliance monitoring, and RAN engineers integrating RF reasoning into network automation stacks.

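* In-phase/quadrature (I/Q) refers to representing a radio signal as two components: an in-phase (I) component and a quadrature (Q) component shifted 90 degrees relative to it. Together they form a complex-valued baseband waveform that captures the signal's amplitude and phase.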
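In the RF sense used in this article, the in-phase and quadrature components combine into a complex baseband signal x(t), from which the transmitted passband signal s(t) at carrier frequency f_c is obtained as below (a standard textbook convention; some references flip the sign of the Q term):

```latex
\begin{aligned}
x(t) &= I(t) + j\,Q(t) \\
s(t) &= I(t)\cos(2\pi f_c t) - Q(t)\sin(2\pi f_c t) = \operatorname{Re}\{x(t)\,e^{j2\pi f_c t}\}
\end{aligned}
```

The "complex I/Q waveforms" that RF-GPT takes as input are sampled versions x[n] of this baseband signal.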

** Spectrograms are two-dimensional time-frequency images computed via the Short-Time Fourier Transform (STFT).
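One common way to write the STFT spectrogram of a sampled complex signal x[n] is shown below, where w[n] is a window of length N, H is the hop between successive frames, m indexes frames (time) and k indexes frequency bins. The window, hop size and decibel scaling are general conventions, not settings reported for RF-GPT.

```latex
\begin{aligned}
X[m,k] &= \sum_{n=0}^{N-1} x[n + mH]\, w[n]\, e^{-j2\pi kn/N} \\
S[m,k] &= 10\log_{10} \lvert X[m,k] \rvert^{2}
\end{aligned}
```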

[Written and edited with the assistance of AI]

LINKS

RF-GPT research paper (arXiv)

Technical blog post (LLM4Telecom)

RF-GPT code (only on request to the Digital Future Institute)

Read more about other telecom AI research from Khalifa University:

UAEU, KU release first open 6G AI benchmark (Middle East AI News)

Khalifa University announces telecom benchmarks (Middle East AI News)

Telecom industry partners develop Arabic Telecom LLM (Middle East AI News)

GSMA releases telecom AI benchmarks (Middle East AI News)

New leaderboard to support development of telecom LLMs (Middle East AI News)