Llama 4 now live via Middle East GroqCloud service

Llama 4 debuts in Middle East region via GroqCloud

#SaudiArabia #LLMs – Groq has gone live with the new Llama 4 models on its Middle East GroqCloud™ service, immediately after Meta announced its latest large language models (LLMs). According to Groq, its Dammam-based AI inferencing centre is the first in the Middle East to provide access to the new models. Available immediately to developers, the Llama 4 models run on Groq’s high-speed AI infrastructure hosted in Saudi Arabia. This launch gives the region day-zero access to Llama 4 Scout, marking a first for the Arab world. Llama 4 Maverick went live via GroqCloud on Wednesday April 9th.*

SO WHAT? – The race to make frontier AI models more accessible is intensifying, and platform exclusives like this one are sought-after differentiators. Groq’s new AI infrastructure in the region gives the Middle East first-mover advantage for Llama 4, at a time when local AI adoption is accelerating. Groq’s ability to provide high-performance AI inference that runs models faster, and at a low price point, will no doubt encourage developers, AI labs and enterprises to begin using Llama 4 with immediate effect.

Here is some more information about the arrival of Llama 4.

Groq has announced that Meta’s Llama 4 is now available exclusively via GroqCloud™ in the Middle East. Llama 4 Scout went live last weekend, while Llama 4 Maverick was made available on April 9th.*

Meta announced the latest versions of its multimodal AI models on Saturday, bringing new levels of performance for processing and integrating various types of data across text, video, images and audio.

GroqCloud offers developers immediate access with no cold starts, no model tuning required, and consistent high-speed inference.

Llama 4 features a Mixture of Experts (MoE) architecture and native multimodality.

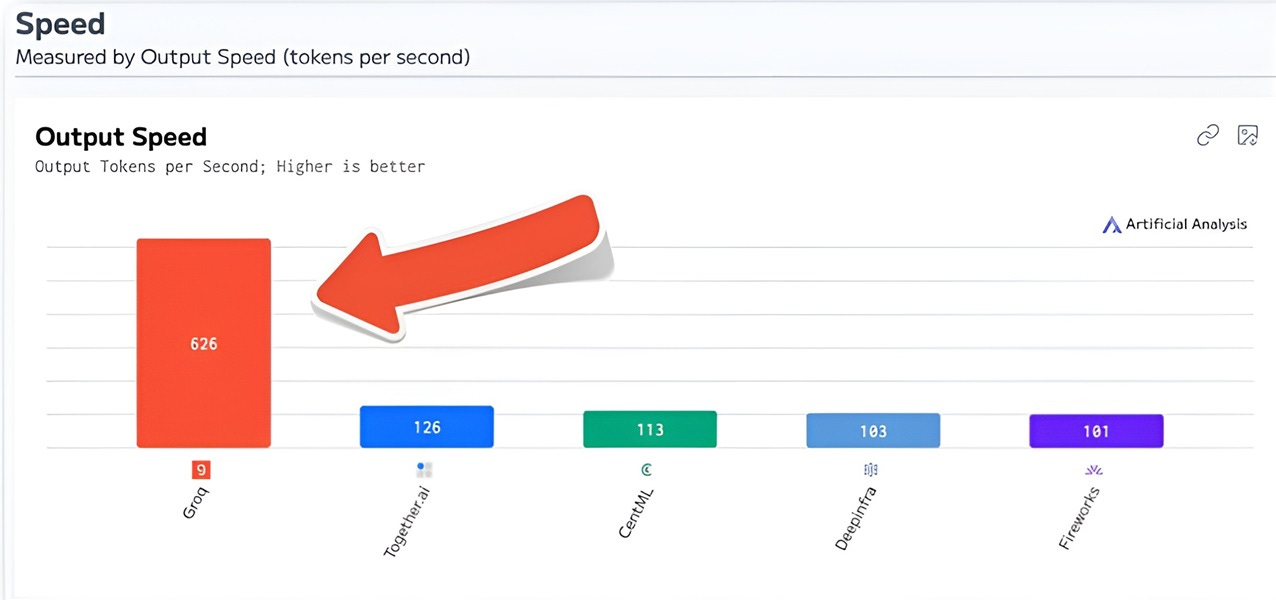

Llama 4 Scout (17Bx16E): A strong general-purpose model, ideal for summarisation, reasoning, and code. Scout runs at over 625 tokens per second on Groq.

Llama 4 Maverick (17Bx128E): A larger, more capable model optimised for multilingual and multimodal tasks, well suited to assistants, chat, and creative applications. Maverick supports 12 languages, including Arabic.

GroqCloud pricing starts at $0.11 per million input tokens for Scout, while Maverick is available at a blended rate of $0.53 per million tokens.*
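To put these rates in context, the short Python sketch below estimates what a given token volume might cost. It uses only the two prices quoted above; the function name `token_cost_usd` and the 50-million-token workload are hypothetical and chosen purely for illustration. Scout's output-token price is not stated here, so the Scout figure covers input tokens only.

```python
# Prices quoted in the article, in US dollars per one million tokens.
SCOUT_INPUT_USD_PER_M = 0.11       # Scout, input tokens only (output price not stated)
MAVERICK_BLENDED_USD_PER_M = 0.53  # Maverick, blended input/output rate


def token_cost_usd(tokens: int, usd_per_million: float) -> float:
    """Return the cost in USD of processing `tokens` at a per-million-token rate."""
    if tokens < 0:
        raise ValueError("tokens must be non-negative")
    return tokens / 1_000_000 * usd_per_million


if __name__ == "__main__":
    workload = 50_000_000  # hypothetical monthly token volume (assumption)
    scout = token_cost_usd(workload, SCOUT_INPUT_USD_PER_M)
    maverick = token_cost_usd(workload, MAVERICK_BLENDED_USD_PER_M)
    print(f"Scout (input only): ${scout:.2f}")
    print(f"Maverick (blended): ${maverick:.2f}")
```

Actual bills would depend on the real split between input and output tokens and on any pricing changes after publication.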

The service is powered by Groq's custom-built LPUs, enabling high-speed inferencing at over 625 tokens per second.
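As a rough illustration of what that throughput means in practice, the sketch below converts a response length into decoding time at the quoted 625 tokens per second. The function name `decode_seconds_at_rate` and the 1,000-token response are hypothetical, and the estimate deliberately ignores network latency, queueing, and time to first token, none of which are quantified here.

```python
QUOTED_TOKENS_PER_SECOND = 625.0  # throughput figure quoted in the article


def decode_seconds_at_rate(output_tokens: int,
                           tokens_per_second: float = QUOTED_TOKENS_PER_SECOND) -> float:
    """Estimate seconds needed to generate `output_tokens` at a steady rate."""
    if output_tokens < 0:
        raise ValueError("output_tokens must be non-negative")
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


if __name__ == "__main__":
    response_length = 1_000  # hypothetical response size in tokens (assumption)
    seconds = decode_seconds_at_rate(response_length)
    print(f"{response_length} tokens at 625 tok/s: about {seconds:.1f} s")
```

Because the quoted figure is "over 625", the result is better read as an approximate ceiling on decoding time than as a measured latency.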

Groq's regional cloud infrastructure is located in Dammam, Saudi Arabia, where the company has built the largest AI inferencing centre in EMEA. The AI hub is backed by Aramco Digital and has been fully operational since February 2025.

GroqCloud access is available through GroqChat, Groq API and Groq’s web console, with a free tier for developers.

This rollout supports Saudi Arabia’s ambitions to build digital capability and reduce reliance on external cloud AI providers.

ZOOM OUT – Groq’s launch of its second global GroqCloud region in Dammam earlier this year marked a major milestone in the company’s international expansion. Backed by investment from Aramco Digital, the new hub is now the largest AI inferencing centre across Europe, the Middle East and Africa. The infrastructure is designed to serve over 4 billion people across the Middle East, Africa and beyond, positioning Saudi Arabia as an epicentre for high-speed, low-cost AI compute. It was further announced this year that Aramco would provide $1.5 billion in funding for the future expansion of Groq’s AI inferencing services.

* Updated 09-Apr-25 to reflect current model availability.

[Written and edited with the assistance of AI]

Read more about Groq:

Groq opens EMEA's largest AI compute centre in KSA (Middle East AI News)

Groq ships to Saudi for huge AI infrastructure cluster (Middle East AI News)

Groq to offer on-demand services from Saudi Arabia (Middle East AI News)